Deep Learning Notes I - Introduction & Math Review

SVD, MLE, Entropy

All modern machine learning algorithms are just nearest neighbors. It’s only that the neural networks are telling you the space in which to compute the distance.

Linear Algebra

Woodbury Identity

\[(A + UCV)^{-1} = A^{-1} - A^{-1}U(C^{-1}+VA^{-1}U)V^{-1}\]其中

\[A\in\mathbb{R}^{n\times n}, C\in\mathbb{R}^{k\times k}, k\ll n\]如果 \(A\) 的逆很好算,那这样变换会大大降低计算量。

Matrix Derivatives

向量 / 标量

\[f(x + \Delta) = f(x) + \frac{\partial f}{\partial x}\Delta + o(\Vert\Delta\Vert)\] \[\nabla f = \left(\frac{\partial f}{\partial x}\right)^\top\]所以假设说 \(f: \mathbb{R}^{n}\rightarrow \mathbb{R}\),我们就应该有

\[\begin{aligned} \frac{\partial f}{\partial x} &= \begin{bmatrix} \frac{\partial f}{\partial x_1} & \frac{\partial f}{\partial x_1} & \ldots & \frac{\partial f}{\partial x_n} \end{bmatrix}&&\in \mathbb{R}^{1\times n}\\ \nabla f &= \left(\frac{\partial f}{\partial x}\right)^\top = \begin{bmatrix} \frac{\partial f}{\partial x_1} \\ \frac{\partial f}{\partial x_1} \\ \vdots \\ \frac{\partial f}{\partial x_n} \end{bmatrix}&&\in \mathbb{R}^{n} \end{aligned}\]标量 / 矩阵

同样的,对于 \(f: \mathbb{R}^{m\times n}\rightarrow \mathbb{R}\),我们有:

\[\left(\frac{\partial f}{\partial X}\right)_{ij} = \frac{\partial f}{\partial X_{ji}}\]酱紫

\[f(X + \Delta) = f(X) + \mathrm{Tr}\left(\frac{\partial f}{\partial X}\Delta\right) + o(\Vert \Delta\Vert)\]Jacobian: 向量 / 向量

假设函数是 \(z: \mathbb{R}^{d}\rightarrow \mathbb{R}^{k}\),我们想要有

\[z(x+\Delta) = z(x) + J(z) \Delta + o(\Vert\Delta\Vert)\]所以其实我们可以看成是 \(z\) 的每行单独拆开来嘛,也就是

\[J(z) = \frac{\partial z}{\partial x} = \begin{bmatrix} \frac{\partial z_1}{\partial x}\\ \frac{\partial z_2}{\partial x}\\ \vdots\\ \frac{\partial z_k}{\partial x} \end{bmatrix}=\begin{bmatrix} \frac{\partial z_1}{\partial x_1} & \frac{\partial z_1}{\partial x_2} &\ldots& \frac{\partial z_1}{\partial x_d}\\ \frac{\partial z_2}{\partial x_1} & \frac{\partial z_2}{\partial x_2} &\ldots& \frac{\partial z_2}{\partial x_d}\\ \vdots&\vdots&\ddots&\vdots\\ \frac{\partial z_k}{\partial x_1} & \frac{\partial z_k}{\partial x_2} &\ldots& \frac{\partial z_k}{\partial x_d}\\ \end{bmatrix}\] \[[J(z)]_{ij} = \left(\frac{\partial z}{\partial x}\right)_{ij} = \frac{\partial z_i}{\partial x_j}\]Hessian: 二阶导

对于函数 \(f: \mathbb{R}^n\rightarrow \mathbb{R}\),我们想要求二阶导

\[\nabla f(x+\Delta) = \nabla f(x) + \nabla^2 f(x)\Delta + o(\Vert\Delta\Vert)\]所以其实就是

\[H(f) = \nabla^2 f(x) = [J(f(x))]^\top = \begin{bmatrix} \frac{\partial^2 f}{\partial x_1^2} & \frac{\partial^2 f}{\partial x_1\partial x_2} & \ldots & \frac{\partial^2f}{\partial x_1\partial x_n}\\ \frac{\partial^2 f}{\partial x_2\partial x_1} & \frac{\partial^2 f}{\partial x_2^2} & \ldots & \frac{\partial^2f}{\partial x_2\partial x_n}\\ \vdots&\vdots&\ddots&\vdots\\ \frac{\partial^2 f}{\partial x_n\partial x_1} & \frac{\partial^2 f}{\partial x_n\partial x_2} & \ldots & \frac{\partial^2f}{\partial x_n^2} \end{bmatrix}\]Derivative Rules

我们先来算 \(\frac{\partial}{\partial x}(AB)\),考虑到

\[\begin{aligned} \left[\frac{\partial}{\partial x}(AB)\right]_{ij} &= \frac{\partial}{\partial x}(AB)_{ij} = \frac{\partial}{\partial x}\sum_{k}A_{ik}B_{kj} \\&= \sum_{k}\left(\frac{\partial A_{ik}}{\partial x}B_{kj} + A_{ik}\frac{\partial B_{kj}}{\partial x}\right)\\&=\frac{\partial A}{\partial x}B + A\frac{\partial B}{\partial x} \end{aligned}\]将 \(A^{-1}A=I\) 代入上式可以得到

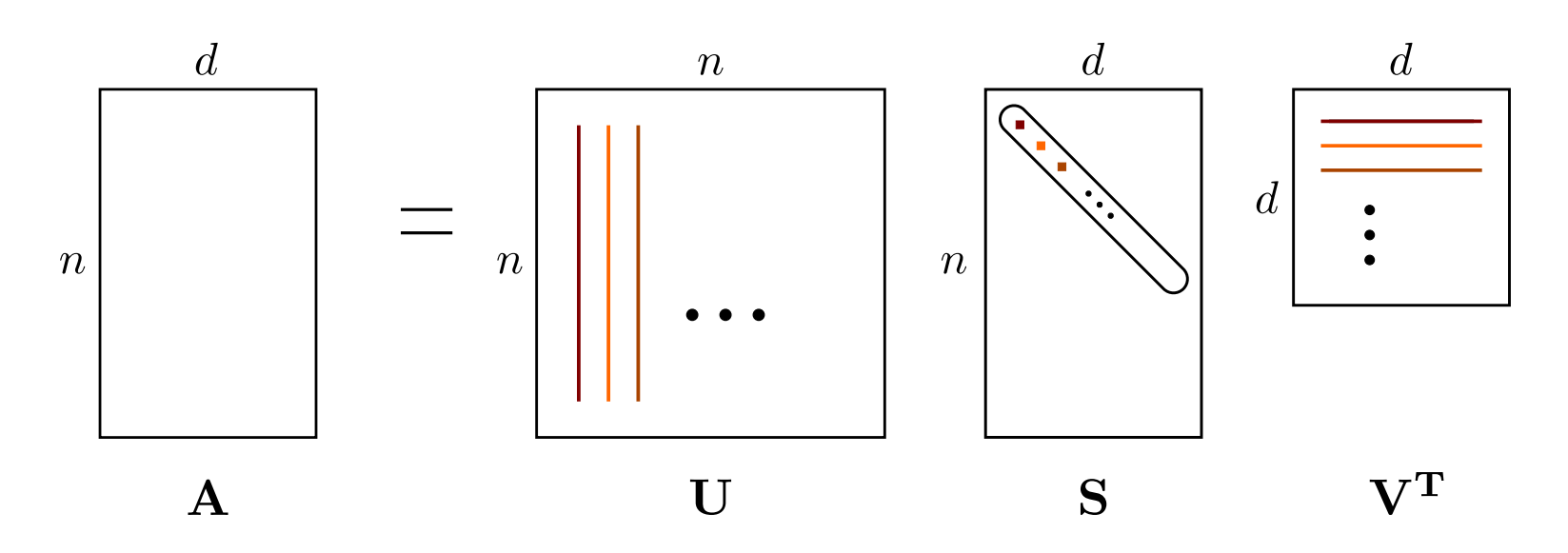

\[\frac{\partial}{\partial x}A^{-1} = -A^{-1}\frac{\partial A}{\partial x}A^{-1}\]SVD

\[A = U \Sigma V^\top=\sum_{i=1}^{\min\{m, n\}}\sigma_i u_i v_i^\top\]

Compute largest \(k\) singular values and vectors: \(\mathcal{O}(kmn)\).

Approximation:

\[\hat{A} = \sum_{i=1}^{k}\sigma_i u_i v_i^\top = U_k \Sigma_k V_k^\top\]For all rank \(k\) matrices \(B\):

\[\|A - \hat{A}\|_F \le \|A - B\|_F\]Calculus of Variations

变分法中,我们考虑的是对于一个函数的函数 \(F(f)\),\(f\) 稍稍改变,\(F\) 就会稍稍改变:

\[F[y(x) + \epsilon \eta(x)] = F[y(x)] + \epsilon\int\frac{\delta F}{\delta y(x)}\eta(x)\mathrm{d}x+\mathcal{O}(\epsilon^2)\]假设

\[F[y] = \int G\left(y(x), y'(x), x\right)\mathrm{d}x\]那么

\[\begin{aligned} \int\frac{\delta F}{\delta y(x)}\eta(x)\mathrm{d}x&= \end{aligned}\]Maximum Likelihood Estimation

Maximum likelihood estimation:

\[\hat{\theta} = \arg\max_{\theta\in\Theta} p(D\mid\theta)\]Properties:

- Consistency: more data, more accurate (but maybe biased).

- Statistically efficient: least variance.

- The value of \(p(D\mid\theta_{\text{MLE}})\) is invariant to re-parameterization.

Entropy

要搞一个 “degree of surprise” 函数 \(h(p(x))\),满足:

- \(h(p) \ge 0\);

- \(h(p) = 0 \iff p = 1\);

- \(x \perp y \iff h(p(x\land y)) = h(p(x)) + h(p(y))\);

- \(h(p_1) > h(p_2)\iff p_1<p_2\).

根据 3 我们有

\[h(p_1 p_2) = h(p_1) + h(p_2)\]如果我们令 \(f(\log p) = h(p)\) 的话,我们有

\[f(\log p_1 + \log p_2) = f(\log p_1) + f(\log p_2)\]所以 \(f(p)\) 是一个线性函数。又因为 \(f(0)=0\),所以 \(f(x)=-c\cdot x\)。\(c>0\) 因为 \(f\) 要单调递减且非负。

所以

\[h(p(x)) = -c\cdot \log p(x)\]通常我们取 \(c=1\) 或 \(c=\frac{1}{\log 2}\)。这边就不管了都写成 \(-\log p(x)\) 了。

于是我们定义

\[\mathcal{H}(x) = \mathbb{E}[h(p(x))] = -\int p(x) \log p(x)\]当然因为 entropy 是从物理来的,他也有一定物理意义。就是我们考虑有 \(N\) 个东西,\(k\) 个状态。第 \(i\) 个状态有 \(n_i\) 个。那么可能的排列数量为

\[W = \frac{N!}{\prod n_i!}\]我们考虑定义 \(\mathcal{H}\) 为 \(N\to\infty\) 时候的状态

\[\begin{aligned} \mathcal{H} &= \lim_{N\to\infty} \frac{1}{N}\log W=-\lim_{N\to\infty}\left(\frac{n_i}{N_i}\right)\log\left(\frac{n_i}{N}\right) \end{aligned}\]其中用到了 Stirling’s approximation

\[\log n! = n\log n - n + \mathcal{O}(\log n)\]Deep Learning Introduction

Risk: expected loss

\[R(\theta) = \mathbb{E}\left[\mathcal{L}(\theta, X, Y)\right]\]Empirical Risk: average loss

\[\hat{R}(\theta) = \frac{1}{N}\sum_{i=1}^N\mathcal{L}(\theta, x_i, y_i)\]Empirical Risk too high: underfitting; too low: overfitting.